Insert date into hive tables shows null during select

Category : Hive

When we try to create table on any files(csv or any other format) and load data into hive table then we may see that during select queries it is showing null value.

You can solve it in the following ways:

[saurkuma@m1 ~]$ ll

total 584

-rw-r–r– 1 saurkuma saurkuma 591414 Mar 16 02:31 SalesData01.csv

[saurkuma@m1 ~]$ hive

WARNING: Use “yarn jar” to launch YARN applications.

ivysettings.xml file not found in HIVE_HOME or HIVE_CONF_DIR,file:/usr/hdp/2.3.4.0-3485/hadoop/lib/hadoop-lzo-0.6.0.2.3.4.0-3485-sources.jar!/ivysettings.xml will be used

Logging initialized using configuration in file:/etc/hive/2.3.4.0-3485/0/hive-log4j.properties

hive> show databases;

OK

default

demo

testhive

Time taken: 3.341 seconds, Fetched: 3 row(s)

hive> use demo;

OK

Time taken: 1.24 seconds

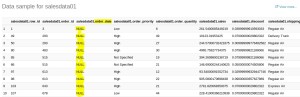

hive> create table salesdata01 (Row_ID INT, Order_ID INT, Order_date String, Order_Priority STRING, Order_Quantity FLOAT, Sales FLOAT, Discount FLOAT, Shipping_Mode STRING, Profit FLOAT, Unit_Price FLOAT) row format delimited fields terminated by ‘,’;

OK

Time taken: 0.782 seconds

hive> select * from salesdata01;

OK

Time taken: 0.721 seconds

hive> load data local inpath ‘/home/saurkuma/SalesData01.csv’ overwrite into table salesdata01;

Loading data to table demo.salesdata01

Table demo.salesdata01 stats: [numFiles=1, totalSize=591414]

OK

Time taken: 1.921 seconds

hive> select * from salesdata01 limit 10;

OK

1 3 13-10-2010 Low 6.0 261.54 0.04 Regular Air -213.25 38.94

49 293 01-10-2012 High 49.0 10123.02 0.07 Delivery Truck 457.81 208.16

50 293 01-10-2012 High 27.0 244.57 0.01 Regular Air 46.71 8.69

80 483 10-07-2011 High 30.0 4965.7593 0.08 Regular Air 1198.97 195.99

85 515 28-08-2010 Not Specified 19.0 394.27 0.08 Regular Air 30.94 21.78

86 515 28-08-2010 Not Specified 21.0 146.69 0.05 Regular Air 4.43 6.64

97 613 17-06-2011 High 12.0 93.54 0.03 Regular Air -54.04 7.3

98 613 17-06-2011 High 22.0 905.08 0.09 Regular Air 127.7 42.76

103 643 24-03-2011 High 21.0 2781.82 0.07 Express Air -695.26 138.14

107 678 26-02-2010 Low 44.0 228.41 0.07 Regular Air -226.36 4.98

Time taken: 0.143 seconds, Fetched: 10 row(s)

hive> select * from salesdata01 where Order_date=’01-10-2012′ limit 10;

OK

49 293 01-10-2012 High 49.0 10123.02 0.07 Delivery Truck 457.81 208.16

50 293 01-10-2012 High 27.0 244.57 0.01 Regular Air 46.71 8.69

3204 22980 01-10-2012 Not Specified 17.0 224.09 0.0 Regular Air -27.92 12.44

3205 22980 01-10-2012 Not Specified 10.0 56.05 0.06 Regular Air -27.73 4.98

2857 20579 01-10-2012 Medium 16.0 1434.086 0.1 Regular Air -26.25 110.99

145 929 01-10-2012 High 21.0 227.66 0.04 Regular Air -100.16 10.97

146 929 01-10-2012 High 39.0 84.33 0.04 Regular Air -64.29 2.08

859 6150 01-10-2012 Critical 38.0 191.14 0.06 Regular Air 82.65 4.98

Time taken: 0.506 seconds, Fetched: 8 row(s)

hive> select Row_ID, cast(to_date(from_unixtime(unix_timestamp(Order_date, ‘dd-MM-yyyy’))) as date) from salesdata01 limit 10;

OK

1 2010-10-13

49 2012-10-01

50 2012-10-01

80 2011-07-10

85 2010-08-28

86 2010-08-28

97 2011-06-17

98 2011-06-17

103 2011-03-24

107 2010-02-26

hive> select Row_ID, from_unixtime(unix_timestamp(Order_date, ‘dd-MM-yyyy’),’yyyy-MM-dd’) from salesdata01 limit 10;

OK

1 2010-10-13

49 2012-10-01

50 2012-10-01

80 2011-07-10

85 2010-08-28

86 2010-08-28

97 2011-06-17

98 2011-06-17

103 2011-03-24

107 2010-02-26

Time taken: 0.157 seconds, Fetched: 10 row(s)

hive> select Row_ID, from_unixtime(unix_timestamp(Order_date, ‘dd-MM-yyyy’)) from salesdata01 limit 10;

OK

1 2010-10-13 00:00:00

49 2012-10-01 00:00:00

50 2012-10-01 00:00:00

80 2011-07-10 00:00:00

85 2010-08-28 00:00:00

86 2010-08-28 00:00:00

97 2011-06-17 00:00:00

98 2011-06-17 00:00:00

103 2011-03-24 00:00:00

107 2010-02-26 00:00:00

Time taken: 0.09 seconds, Fetched: 10 row(s)

hive> select Row_ID, from_unixtime(unix_timestamp(Order_date, ‘dd-MM-yyyy’),’dd-MM-yyyy’) from salesdata01 limit 10;

OK

1 13-10-2010

49 01-10-2012

50 01-10-2012

80 10-07-2011

85 28-08-2010

86 28-08-2010

97 17-06-2011

98 17-06-2011

103 24-03-2011

107 26-02-2010

Another example:

If you are trying to store the date and timestamp values in timestamp column using hive.The source file contain the values of date or sometimes timestamps.

Sample Data:

[saurkuma@m1 ~]$ cat sample.txt

1,2015-04-15 00:00:00

2,2015-04-16 00:00:00

3,2015-04-17

hive> create table table1 (id int,tsstr string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ‘,’LINES TERMINATED BY ‘\n’;

OK

Time taken: 0.241 seconds

hive> LOAD DATA LOCAL INPATH ‘/home/saurkuma/sample.txt’ INTO TABLE table1;

Loading data to table demo.table1

Table demo.table1 stats: [numFiles=1, totalSize=57]

OK

Time taken: 0.855 seconds

hive> select * from table1;

OK

1 2015-04-15 00:00:00

2 2015-04-16 00:00:00

3 2015-04-17

Time taken: 0.097 seconds, Fetched: 3 row(s)

hive> create table table2 (id int,mytimestamp timestamp) ROW FORMAT DELIMITED FIELDS TERMINATED BY ‘,’ LINES TERMINATED BY ‘\n’;

OK

Time taken: 0.24 seconds

hive> INSERT INTO TABLE table2 select id,if(length(tsstr) > 10, tsstr, concat(tsstr,’ 00:00:00′)) from table1;

Query ID = saurkuma_20170316032711_63d9129a-38c1-4ae8-89f4-e158218d2587

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there’s no reduce operator

Starting Job = job_1489644687414_0001, Tracking URL = http://m2.hdp22:8088/proxy/application_1489644687414_0001/

Kill Command = /usr/hdp/2.3.4.0-3485/hadoop/bin/hadoop job -kill job_1489644687414_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2017-03-16 03:27:36,290 Stage-1 map = 0%, reduce = 0%

2017-03-16 03:27:55,806 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.89 sec

MapReduce Total cumulative CPU time: 1 seconds 890 msec

Ended Job = job_1489644687414_0001

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to: hdfs://TESTHA/apps/hive/warehouse/demo.db/table2/.hive-staging_hive_2017-03-16_03-27-11_740_404528501642205352-1/-ext-10000

Loading data to table demo.table2

Table demo.table2 stats: [numFiles=1, numRows=3, totalSize=66, rawDataSize=63]

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 1.89 sec HDFS Read: 4318 HDFS Write: 133 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 890 msec

OK

Time taken: 47.687 seconds

hive> select * from table2;

OK

1 2015-04-15 00:00:00

2 2015-04-16 00:00:00

3 2015-04-17 00:00:00

Time taken: 0.119 seconds, Fetched: 3 row(s)

I hope this helped you to solve your problem and feel free to give your valuable feedback or suggestions.